On Press Freedom Day, the Media Council issued a stern warning about the rising threat of AI-generated misinformation. As artificial intelligence grows more sophisticated, so does its potential to spread false information, posing significant risks to media integrity and public trust.

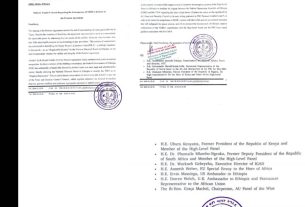

The council emphasized the urgent need for media outlets to verify the authenticity of news content before publication. “AI-generated misinformation can be difficult to detect, as it is often highly convincing,” said a spokesperson for the Media Council. “Journalists and news organizations must remain vigilant and implement robust fact-checking processes to maintain credibility and uphold press freedom.”

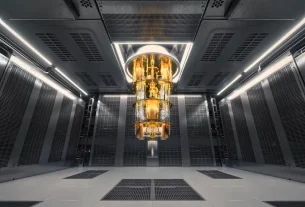

Misinformation generated by AI can take many forms, including deepfake videos, manipulated images, and fabricated news articles. Such content can go viral rapidly, misleading audiences and potentially influencing public opinion, elections, and even international relations.

The Media Council also called on social media platforms to strengthen their AI detection systems to identify and remove false content swiftly. “Social media plays a critical role in news dissemination, but it also provides a fertile ground for misinformation to spread unchecked,” the spokesperson added.

In response to these concerns, the council is launching a series of workshops aimed at educating journalists and media professionals on identifying AI-generated content and implementing ethical guidelines for AI usage in news reporting.

As the global media landscape evolves, combating AI-generated misinformation will require a coordinated effort among media organizations, technology companies, and regulatory bodies. By promoting responsible AI usage and prioritizing fact-based journalism, the Media Council aims to protect the integrity of the press and safeguard public trust.